5349

Aurora 大题

1. [2 pts] Max data size stored in Aurora

Answer: 64 TB

Explanation: Aurora stores data in 10GB segments replicated across 6 storage nodes per PG. Total maximum size is 64 TB as per AWS Aurora architecture design.

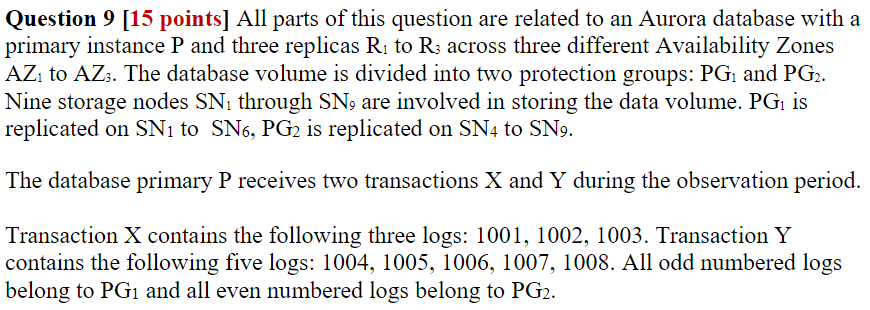

2. [3 pts] Logs sent by P to R1

Answer: All logs: 1001–1008

Explanation: Replicas (R1–R3) receive all logs from primary to update their in-memory buffers, regardless of PG.

3. [3 pts] Logs sent by P to SN1

Answer: 1001, 1003, 1005, 1007

Explanation: SN1 belongs to PG1 only. All odd LSNs belong to PG1, so only odd-numbered logs are sent to SN1.

4. [3 pts] Distribution of SN4, SN5, SN6

Answer:

- SN4 → AZ1

- SN5 → AZ2

- SN6 → AZ3

Explanation: Each PG must span 3 AZs with 2 copies in each. Current known distribution shows AZ1, AZ2, AZ3 each has 2 nodes. Assign SN4–SN6 one to each AZ to satisfy 6 nodes in 3 AZs, 2 per AZ.

5. [4 pts] CPL and VDL values

Given:

Received LSNs: 1001–1004, 1006–1008

Missing: 1005

Answer:

- CPL: 1003

- VDL: 1003

Explanation:

Transaction X (1001–1003) fully received → CPL = 1003

Transaction Y missing 1005 → not fully committed → no CPL

So latest committed transaction is X → VDL = 1003

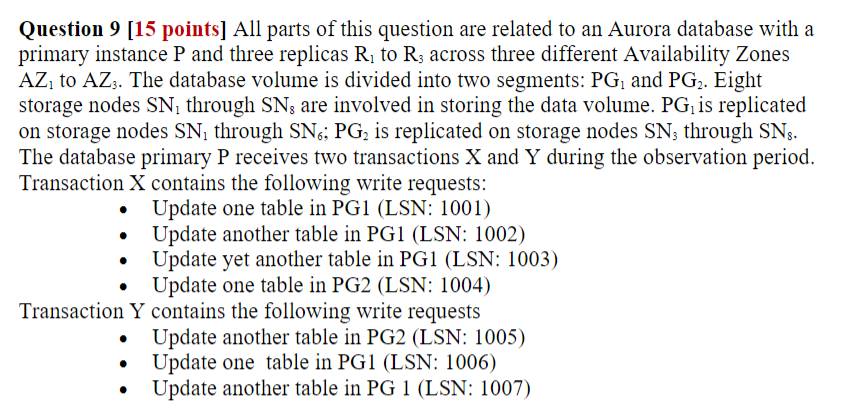

1. [5 pts] Storage node distribution across AZs

Answer:

One possible AZ distribution to satisfy Aurora’s replication:

- AZ1: SN1, SN6, SN7

- AZ2: SN2, SN5, SN8

- AZ3: SN3, SN4

Explanation:

Aurora requires each Protection Group to span 3 AZs, with 2 nodes per AZ.

- PG1 (SN1–SN6) → 6 nodes across 3 AZs, 2 per AZ

- PG2 (SN3–SN8) → same requirement

This distribution satisfies both PGs’ replication needs.

2. [5 pts] How SN5 fills in missing logs

Given:

SN5 received logs: 1001, 1002, 1004, 1006

→ Missing: 1003, 1005, 1007

Answer:

SN5 detects missing LSNs by checking sequence gaps.

It sends “Read Index” or “Gap Fill” requests to peer storage nodes in the same PG (e.g., SN1–SN6 for PG1, SN3–SN8 for PG2) asking for missing logs.

If at least 3 other nodes have the log, SN5 can fetch and fill the gap.

Example:

- Missing 1003 (PG1) → request from SN1, SN2, SN6

- Missing 1005 (PG2) → request from SN4, SN6, SN8

- Once received and verified, SN5 appends logs locally.

3. [5 pts] CPL and VDL values

Given:

LSNs 1001–1007 all received by storage service

Answer:

- CPL values: 1004, 1007

- VDL: 1007

Explanation:

- Transaction X (LSNs 1001–1004) fully received → CPL = 1004

- Transaction Y (1005–1007) also fully received → CPL = 1007

- Since both PG1 and PG2 have received logs for both transactions, latest consistent CPL = 1007

→ So VDL (last durable committed transaction) = 1007

Question 1 [6 points] Name three typical service models in cloud computing. Give an example service of each model.

The three main cloud service models are:

(1) IaaS, like Amazon EC2, where users manage virtual machines;

(2) FaaS, like AWS Lambda, where users run code without managing servers;

(3) SaaS, like Google Docs, where users access complete software over the internet.

Question 2 [5 points] Describe two typical traffic patterns in modern data centre. Explain how they affect the networking technology evolution in cloud computing era.

课件里面没有应该不考)

Modern data centers handle north-south traffic (between users and servers) and east-west traffic (between internal components). In cloud computing, east-west traffic dominates due to microservices and distributed architectures. This leads to innovations like software-defined networking, high-speed switching, and VPC peering to improve internal traffic efficiency.现代数据中心处理南北向流量 (用户与服务器之间)和东西向流量 (内部组件之间)。在云计算中,由于微服务和分布式架构,东西向流量占主导地位。这催生了软件定义网络、高速交换和 VPC 对等连接等创新,以提高内部流量效率。

Question 3 [8 points] Explain hardware-assisted virtualisation. Name a hardware assisted virtualisation system and describe its components in detail.

Hardware-Assisted Virtualisation

Definition:

Hardware-assisted virtualization is a technology where the CPU provides special instructions (e.g., Intel VT-x, AMD-V) to support efficient virtual machine (VM) execution. It reduces overhead and improves performance compared to purely software-based virtualisation.

Example System: KVM (Kernel-based Virtual Machine)

Components:

- KVM Module

Turns the Linux kernel into a hypervisor, enabling VM support using CPU virtualization extensions (VT-x/AMD-V). - QEMU (Quick Emulator)

Emulates hardware devices (e.g., disk, network) for VMs. - Libvirt

Provides a management interface for creating, starting, and stopping VMs. - vCPU and pCPU Mapping

Each VM has virtual CPUs (vCPUs), which are dynamically mapped to physical CPUs (pCPUs). - Memory Management

Guest OS thinks it controls memory, but actual memory mapping is managed by the hypervisor.

How It Works (KVM):

- A guest OS is launched as a Linux process.

- CPU runs guest code in a special mode.

- Memory and I/O are virtualized by QEMU.

- Admins use Libvirt to manage VM lifecycle.

Question 4 [10 points] Describe shared responsibility model in cloud computing. Use a particular AWS service covered in this unit to explain how responsibilities are shared between cloud providers and cloud customers.

AWS Shared Responsibility Model

Cloud security is a shared responsibility between AWS and the customer.

AWS is responsible for “Security of the Cloud”

Includes:

- Physical security of data centers

- Hardware, software, and network infrastructure

- Virtualization and storage layer

- Host OS (for managed services like RDS or Lambda)

Customer is responsible for “Security in the Cloud”

Includes:

- Operating system (if using EC2)

- Applications and data

- Security groups and firewall rules

- IAM users, roles, and permissions

- Encryption and network configuration

Example: Amazon EC2

- AWS handles:

- Data center

- Physical host security

- Hypervisor and virtualization layer

- Customer handles:

- Installing and patching the OS

- Application configuration and updates

- Configuring security groups and IAM roles

- Protecting access (SSH keys, MFA)

Question 5 [8 points] Describe the concept of elasticity. Give examples of two AWS services with the term “elastic” as part of the service name. For each service, describe what elastic features are available in this service.

What is Elasticity?

Elasticity is the ability of a system to automatically scale resources up or down based on demand.

弹性是系统根据需求自动扩大或缩小资源的能力。

Example 1: Amazon EC2 (Elastic Compute Cloud)

- Elastic feature:

Auto Scaling Group can automatically launch or terminate EC2 instances based on traffic, CPU usage, or a schedule. Auto Scaling Group 可以根据流量、CPU 使用率或计划自动启动或终止 EC2 实例。 - Result:

You pay only for what you need. It helps maintain performance under load and saves cost when demand drops. 您只需按需付费。这有助于在负载下保持性能,并在需求下降时节省成本。

Example 2: Elastic Load Balancing (ELB)

- Elastic feature:

Automatically distributes incoming traffic across healthy targets (e.g., EC2, containers).

自动在健康目标(例如 EC2、容器)之间分配传入流量。 - Result:

Improves availability and fault tolerance by adjusting to changing traffic patterns. 通过适应不断变化的流量模式来提高可用性和容错能力。

IAM policy

{ |

This policy allows starting, stopping, or terminating EC2 instances only if:

- The instance type is

t2.microort2.small, and - The instance is tagged with

Unit=COMP5349.

{ |

This policy allows a user to start or stop EC2 instances only if the instance is type t2.micro or t2.small and has the tag Project=DataAnalytics. No other instances are affected.

Question 1 [6 points ] Discuss the distinction between an Amazon IAM group and an Amazon IAM role, and identify one situation that can be accomplished using both mechanisms, and another situation that can only be done with one of them.

IAM Groups are used to assign permissions to multiple users. IAM Roles are used for temporary access, often by AWS services or external users. Both can be used to grant access to S3. But only roles can be assumed by services like Lambda, which groups cannot do.

| Feature | IAM Group | IAM Role |

|---|---|---|

| Purpose | Group multiple IAM users to manage permissions together | Temporary access for users or services |

| Who uses it | IAM users in the same account | IAM users, AWS services, federated users |

| Credential | Uses IAM user’s own credentials | Uses temporary credentials assumed at runtime |

Question 2 [8 points] Describe the distinctions between the virtualization technologies employed by Xen hypervisor and Nitro system.

| Feature | Xen Hypervisor | Nitro System |

|---|---|---|

| Type | Traditional Type-1 hypervisor | Hardware + software system using hardware-assisted virtualization |

| Architecture | Uses Dom0 (control domain) to manage guest VMs | No Dom0, management tasks moved to Nitro Controller |

| Isolation | Guest VMs share hypervisor layer, less strict isolation | Each VM runs on a dedicated microVM with strong isolation |

| Performance | Good, but more overhead due to Dom0 and software-based I/O | Better performance due to offloading I/O to hardware |

| Security | Medium – depends on Dom0 integrity | High – minimal trusted computing base, better isolation |

| Used by | Early generations of EC2 instances | Current EC2 instance families (e.g., M5, C5, T3, etc.) |

Xen uses a traditional hypervisor model with a special control domain (Dom0) to manage guest VMs. It relies more on software-based virtualization. In contrast, the Nitro system uses hardware-assisted virtualization with dedicated microVMs and offloads networking and storage to dedicated Nitro cards, providing better performance and stronger isolation. Xen 使用传统的虚拟机管理程序模型,并使用特殊的控制域 (Dom0) 来管理客户虚拟机。它更多地依赖于基于软件的虚拟化。相比之下,Nitro 系统使用硬件辅助虚拟化,配备专用的 microVM,并将网络和存储负载转移到专用的 Nitro 卡上,从而提供更佳的性能和更强的隔离性。

Question 3 [5 points] Explain the meaning of Infrastructure as Code. Give an example of AWS IaC service.

Infrastructure as Code (IaC) is a way to manage cloud infrastructure using code. In AWS, CloudFormation is an example of IaC. Users define infrastructure (e.g., EC2, VPC, RDS) in a template, and AWS automatically sets it up. This improves automation, consistency, and repeatability. 基础设施即代码 (IaC) 是一种使用代码管理云基础设施的方法。在 AWS 中,CloudFormation 就是 IaC 的一个例子。用户在模板中定义基础设施(例如 EC2、VPC、RDS),AWS 会自动设置。这提高了自动化程度、一致性和可重复性。

Question 4 [6 points] Identify and describe two of the various mechanisms that are necessary for ensuring a highly available environment.

High availability can be achieved by deploying services across multiple Availability Zones and using load balancers. Multi-AZ ensures redundancy in case one zone fails, while load balancers distribute traffic to healthy instances, preventing overload and improving fault tolerance. 通过跨多个可用区部署服务并使用负载均衡器,可以实现高可用性。多可用区可确保在一个可用区发生故障时提供冗余,而负载均衡器可将流量分配到运行正常的实例,从而防止过载并提高容错能力。